The Hidden Platform Lesson Behind Airbnb’s Data Quality Framework

A Blueprint for Smarter Platform Engineering

A few years ago, Airbnb was drowning in data bugs that broke trust in its metrics. The fix wasn’t a new model. It was a platform framework called Wall that cleaned up pipelines and made data reliable again. In today’s CloudPro, we unpack what every platform engineer can learn from it.

Cheers,

Editor-in-Chief

AI-Powered Platform Engineering Workshop

Most platform teams hit the same wall: tools pile up, self-service breaks down, and platforms get rebuilt every 18 months.

On September 16, join CNCF Ambassador Max Körbächer for a 3-hour live workshop on how to design internal platforms with AI baked in.

Use Code: LIMITED40

A few years back, Airbnb hit a painful truth: a single data bug could quietly poison dashboards, mislead teams, and steer decisions the wrong way.

To deal with it, Airbnb launched a Data Quality Initiative. The company rolled out Midas, a certification process for critical datasets, and made checks for accuracy, completeness, and anomalies mandatory.

It sounded good on paper. But in practice, it quickly turned into a mess.

Every team wrote checks in their own way: Hive, Presto, PySpark, Scala. There was no central view of what was covered, and updating rules meant editing code in a dozen different places. Teams duplicated effort, each building their own half-complete frameworks to run checks. And pipelines grew heavy: every check was an Airflow task, DAG files ballooned, and false alarms could block production jobs.

Airbnb needed a better path.

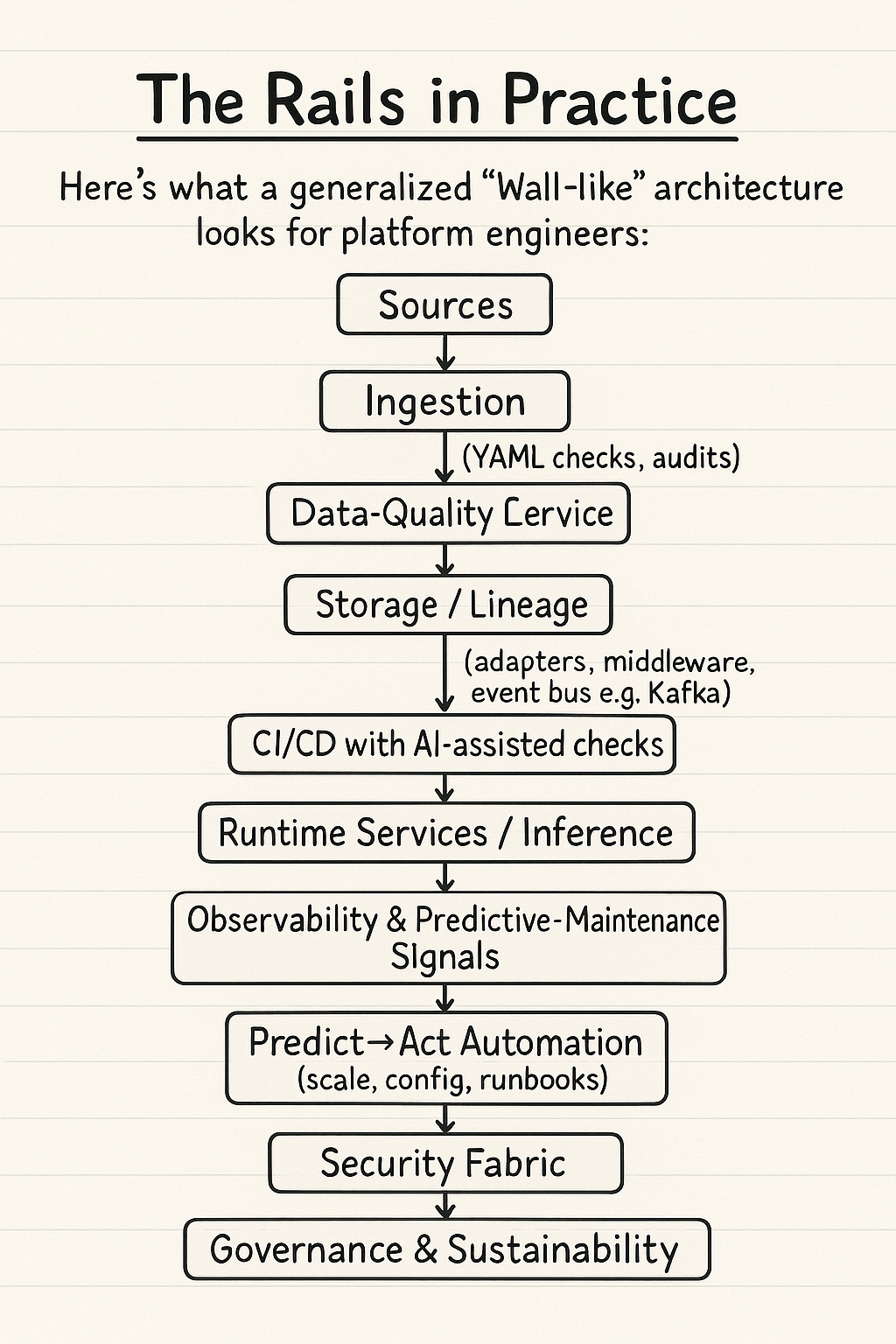

So Airbnb built Wall, a single framework for data-quality checks. Instead of writing custom code, engineers declared checks in YAML configs. A shared Airflow helper ran them, keeping check logic separate from pipeline code. Wall also distinguished blocking from non-blocking checks, so minor issues didn’t stop critical flows. And instead of burying results in logs, it published them to Kafka for other systems to consume.
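
To make the config-driven idea concrete, here is a minimal Python sketch under a simplified, assumed schema. The `CONFIG` dict stands in for what a YAML loader would return from a check file; the field names (`table`, `checks`, `type`, `column`, `min_rows`) and the helpers `run_checks` and `CHECK_TYPES` are hypothetical illustrations, not Wall’s actual schema or API. Rows are plain dicts so the example runs without a warehouse.

```python
from typing import Any, Callable

# Hypothetical check config, shaped like the output of loading a YAML file.
CONFIG: dict[str, Any] = {
    "table": "bookings",
    "checks": [
        {"name": "has_rows", "type": "row_count", "min_rows": 1},
        {"name": "no_null_ids", "type": "not_null", "column": "booking_id"},
    ],
}

Row = dict[str, Any]
CheckFn = Callable[[list[Row], dict[str, Any]], bool]


def row_count(rows: list[Row], spec: dict[str, Any]) -> bool:
    """Pass when the table has at least `min_rows` rows."""
    return len(rows) >= spec["min_rows"]


def not_null(rows: list[Row], spec: dict[str, Any]) -> bool:
    """Pass when no row has a missing or None value in `column`."""
    return all(row.get(spec["column"]) is not None for row in rows)


# One shared implementation per check type; teams only write config.
CHECK_TYPES: dict[str, CheckFn] = {
    "row_count": row_count,
    "not_null": not_null,
}


def run_checks(config: dict[str, Any], rows: list[Row]) -> dict[str, bool]:
    """Run every check declared in `config` and map check name -> passed."""
    results: dict[str, bool] = {}
    for spec in config["checks"]:
        check = CHECK_TYPES[spec["type"]]  # KeyError flags an unknown check type.
        results[spec["name"]] = check(rows, spec)
    return results


if __name__ == "__main__":
    rows = [{"booking_id": 1}, {"booking_id": None}]
    print(run_checks(CONFIG, rows))  # {'has_rows': True, 'no_null_ids': False}
```

The design point is the registry: each check type is implemented once, and teams only write configuration that selects and parameterizes it.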

The results were dramatic. Some pipelines shed more than 70% of their Airflow code. Teams stopped reinventing the wheel. Data-quality checks went from fragile and inconsistent to a paved path everyone could rely on.

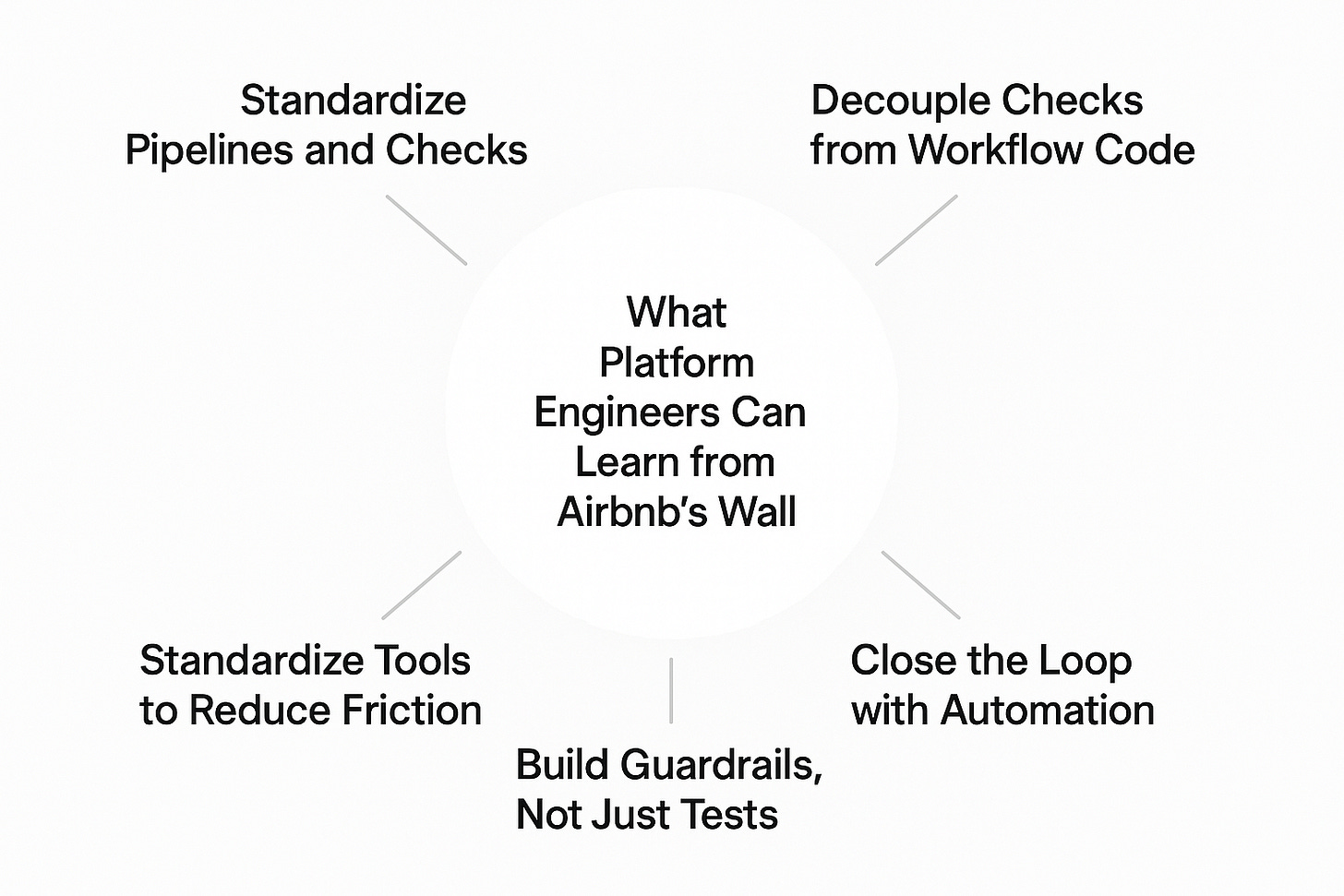

What Platform Engineers Can Learn from Airbnb

1. Standardize Pipelines and Checks

In Mastering Enterprise Platform Engineering, authors Mark and Gautham make a simple point: reliable AI doesn’t come from choosing the perfect model. It comes from the rails underneath: pipelines, integration layers, automation, and guardrails. The figures they cite are striking: clean pipelines alone can improve model performance by 30%, audits can cut errors by 90%, flexible integration can halve deployment time, and predictive automation can reduce downtime by 50%.

Reliable AI starts with consistent pipelines and repeatable checks. When pipelines are clean and quality checks are routine, the entire system gets more predictable. That’s why standardized checks and audits can boost accuracy so dramatically.

Airbnb learned this the hard way. Each team had its own approach to checks, spread across different engines. The duplication and inconsistency created constant drag. Wall fixed it by moving to YAML-based checks in a single framework. Suddenly, teams were speaking the same language. Some pipelines saw their DAGs shrink by more than 70%.

2. Decouple Checks from Workflow Code

One of the biggest risks in complex systems is tangling logic together. When validation lives inside workflow code, every change increases fragility. By pulling checks out into their own layer, you gain flexibility, reuse, and resilience.

Wall embodied this. Instead of clogging DAGs with hand-written check tasks, it moved checks into configuration run by a shared helper. Results flowed into Kafka, where other systems could consume them. Checks were no longer bound to individual pipelines; they became a decoupled, reusable rail.
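
A minimal sketch of that decoupling, assuming a hypothetical event schema: the pipeline task calls `emit_check_result`, which serializes a result as JSON and hands it to a publisher. `InMemoryPublisher` stands in for a Kafka producer, and the topic name `data_quality_results` is an assumption; neither reflects Wall’s real interface.

```python
import json
from datetime import datetime, timezone
from typing import Any, Protocol


class Publisher(Protocol):
    """Anything that can send bytes to a named topic (e.g. a Kafka producer wrapper)."""

    def publish(self, topic: str, payload: bytes) -> None: ...


class InMemoryPublisher:
    """Test double that records messages instead of sending them to a broker."""

    def __init__(self) -> None:
        self.messages: list[tuple[str, bytes]] = []

    def publish(self, topic: str, payload: bytes) -> None:
        self.messages.append((topic, payload))


def emit_check_result(
    publisher: Publisher,
    table: str,
    check: str,
    passed: bool,
    topic: str = "data_quality_results",  # hypothetical topic name
) -> dict[str, Any]:
    """Serialize one check result as a JSON event and hand it to the publisher."""
    event = {
        "table": table,
        "check": check,
        "passed": passed,
        "emitted_at": datetime.now(timezone.utc).isoformat(),
    }
    publisher.publish(topic, json.dumps(event).encode("utf-8"))
    return event


if __name__ == "__main__":
    # The pipeline task only emits results; it knows nothing about consumers.
    pub = InMemoryPublisher()
    emit_check_result(pub, "bookings", "no_null_ids", passed=False)
    topic, payload = pub.messages[0]
    print(topic, json.loads(payload)["passed"])  # data_quality_results False
```

Because the task depends only on the `Publisher` protocol, the transport can change without touching pipeline code.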

3. Close the Loop with Automation

Validation without action is just noise. The real value comes when checks automatically trigger responses: scaling a service, blocking a bad job, or notifying the right team. This kind of predict→act loop is where platform engineering proves its worth.

Wall pushed Airbnb in this direction. Because results were published as Kafka events, downstream tools could subscribe to them and act immediately. Instead of waiting for humans to parse dashboards, the platform could close the loop itself.
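
The consuming side can be sketched as a small dispatcher, assuming events shaped like `{"table": ..., "check": ..., "passed": ...}`. The routing table and the actions `notify_owner` and `pause_downstream` are hypothetical; here they return descriptions instead of calling real alerting or scheduler APIs. In practice, a Kafka consumer loop would pass each message payload to `handle_event`; that wiring is omitted.

```python
import json
from typing import Any, Callable

Event = dict[str, Any]
Handler = Callable[[Event], str]


def notify_owner(event: Event) -> str:
    # Placeholder: a real handler might page the team or post to chat.
    return f"notify owner of {event['table']}"


def pause_downstream(event: Event) -> str:
    # Placeholder: a real handler might pause jobs that read this table.
    return f"pause jobs reading {event['table']}"


# Hypothetical routing table: which responses a failing check triggers.
ROUTES: dict[str, list[Handler]] = {
    "no_null_ids": [pause_downstream, notify_owner],
}
DEFAULT_ROUTE: list[Handler] = [notify_owner]


def handle_event(payload: bytes) -> list[str]:
    """Consume one result event; run the routed actions if the check failed."""
    event: Event = json.loads(payload)
    if event["passed"]:
        return []  # Passing checks need no action.
    handlers = ROUTES.get(event["check"], DEFAULT_ROUTE)
    return [handler(event) for handler in handlers]


if __name__ == "__main__":
    msg = json.dumps({"table": "bookings", "check": "no_null_ids", "passed": False}).encode()
    print(handle_event(msg))  # ['pause jobs reading bookings', 'notify owner of bookings']
```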

4. Build Guardrails, Not Just Tests

Not every failed check should bring the system down. The right approach is to design guardrails: rules that let you decide what’s a blocker and what’s not. This keeps the platform safe without making it brittle.

Wall introduced blocking vs. non-blocking checks to solve this. Critical issues stopped the flow; minor ones didn’t. That simple design choice turned fragile pipelines into resilient ones. Guardrails, not tests, are what kept the system trustworthy.
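
Here is one way to sketch blocking vs. non-blocking semantics in Python, assuming each check result carries a `blocking` flag (a hypothetical field name, as are `CheckResult`, `enforce`, and `BlockingCheckFailed`). Blocking failures raise an exception; non-blocking failures are logged as warnings and returned. In an Airflow task, an uncaught exception fails the task, so downstream tasks with the default trigger rule do not run.

```python
import logging
from dataclasses import dataclass

logger = logging.getLogger("data_quality")


@dataclass(frozen=True)
class CheckResult:
    name: str
    passed: bool
    blocking: bool  # hypothetical flag: should a failure stop the pipeline?


class BlockingCheckFailed(Exception):
    """Raised when at least one blocking check fails."""


def enforce(results: list[CheckResult]) -> list[str]:
    """Log non-blocking failures, raise on blocking ones, else return warning names."""
    failed = [r for r in results if not r.passed]
    warnings = [r.name for r in failed if not r.blocking]
    blockers = [r.name for r in failed if r.blocking]
    for name in warnings:
        logger.warning("non-blocking check failed: %s", name)
    if blockers:
        raise BlockingCheckFailed("blocking checks failed: " + ", ".join(blockers))
    return warnings


if __name__ == "__main__":
    logging.basicConfig(level=logging.WARNING)
    results = [
        CheckResult("has_rows", passed=True, blocking=True),
        CheckResult("freshness", passed=False, blocking=False),
    ]
    print(enforce(results))  # logs a warning, then prints ['freshness']
```

Keeping the blocking decision in data rather than code means a team can downgrade a noisy check to non-blocking by editing its config.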

5. Standardize Tools to Reduce Friction

Every extra framework, every redundant tool, adds friction. Engineers spend more time maintaining and less time building. The fix is to standardize on a common set of rails, even if it means trade-offs.

Airbnb saw this firsthand. With every team writing their own frameworks, they were duplicating effort and missing features. Wall gave them a single standard, cutting out wasted work. Once engineers stopped arguing about how to check data, they could focus on using it.

This walkthrough was adapted from Mastering Enterprise Platform Engineering and connects to Packt’s AI-Powered Platform Engineering Workshop on September 16.

It’s a live, 3-hour session led by CNCF Ambassador Max Körbächer, focused on how to build internal platforms with AI baked in: platforms that stay usable, scalable, and sustainable.

CloudPro readers get 40% off tickets for the next 48 hours.

Use Code: LIMITED40